Deep learning is a technique used to teach machines to recognize patterns and behaviors.

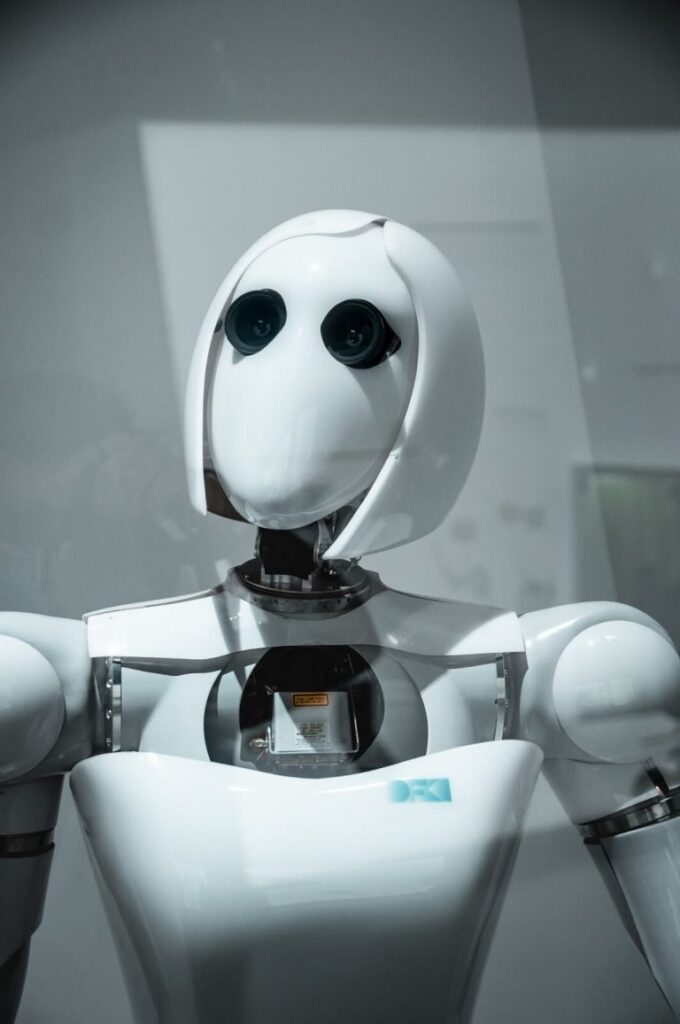

Source – Unsplash

Deep Learning algorithms are inspired by information processing patterns found in the human brain. Instead of explicitly telling an algorithm which part or feature of data is important, we let the machine decide for itself.

What can machines do with AI/ML or Deep Learning?

Use cases range from language translation and object detection to speech and sound detection and prediction. You can check more examples and use cases in action here.

More about Deep Learning in my previous blog here.

How to train a machine?

Deep Learning and AI sound cool, so how do you get started?

Well, before we jump into code and learn how to use various libraries, let’s first understand the steps by which a typical machine learning or deep learning model is created.

What’s a model?

Let’s say you want your machine to identify whether a given image is a pancake or a crepe. This image-identifying system is called a model.

Note: A model is not restricted to images; it can be any type of question-answering system.

Generally, building a model follows 7 steps:

- Gathering data

- Preparing that data

- Choosing a model

- Training

- Evaluation

- Hyperparameter tuning

- Prediction

1. Gathering the Data

Pancake vs Crepe

The first thing is pretty obvious: we need images of pancakes and crepes. How many, you ask? As many as possible, but in equal proportion. We want our machine to see both categories in equal proportion so that one doesn’t dominate the other.
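To make "equal proportion" concrete, here is a minimal sketch that measures each category's share of the dataset. The label list and its counts are made up for illustration; in practice the labels would come from whatever you collected.

```python
from collections import Counter

def class_balance(labels):
    """Return each label's share of the dataset as a fraction of the total."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}

# Hypothetical labels for a small collected dataset (counts are invented).
labels = ["pancake"] * 480 + ["crepe"] * 520
shares = class_balance(labels)

for label, share in shares.items():
    print(f"{label}: {share:.0%}")
```

If one share is far larger than the other, it is usually worth collecting more images of the smaller category before training.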

How do we gather the data?

There are plenty of sources on the internet where datasets are available to download and use directly. A few of them are Kaggle, World Bank Open Data, Google Public Data Explorer, FiveThirtyEight, Reddit, etc.

But what if the data isn’t available in a single place? You scrape it from the web and gather it. And if even that’s impossible, make your own data!

Fun Fact – Pancakes and crepes look similar, but pancake batter has a raising agent.

2. Preparing the Data

Once we gather the data, we have to do some pre-processing. This involves removing unrelated data, dealing with missing values and categorical data, and other adjustments such as de-duping, normalization, and error correction.
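As a rough sketch of what some of these clean-up steps can look like, the snippet below drops records with a missing label, removes exact duplicates, and normalizes pixel values. The `(path, label)` record format and the file names are assumptions made for this example, not a required layout.

```python
def clean_records(records):
    """Drop records with a missing label and exact duplicates, keeping order."""
    seen = set()
    cleaned = []
    for path, label in records:
        if label is None:           # missing value: skip the record
            continue
        key = (path, label)
        if key in seen:             # de-duping: skip repeats
            continue
        seen.add(key)
        cleaned.append(key)
    return cleaned

def normalize_pixels(pixels):
    """Scale 8-bit pixel values (0-255) into the range 0.0-1.0."""
    return [value / 255.0 for value in pixels]

# Hypothetical records: (image path, label).
records = [
    ("img_001.jpg", "pancake"),
    ("img_002.jpg", None),          # label is missing
    ("img_001.jpg", "pancake"),     # exact duplicate
    ("img_003.jpg", "crepe"),
]
cleaned = clean_records(records)
scaled = normalize_pixels([0, 51, 255])
```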

Preparing the data is the most important step, and it’s where most analysts and data scientists spend their time.

We don’t want our machine to learn from raw, inaccurate data, because it leads to poor performance and results.

Imagine a few images of pizza or waffles that come labeled as pancakes; they would confuse our machine.

Apart from cleaning, we should also perform some data manipulation and transformation, such as cropping the image, rotating it, adding padding to it, or changing it to grayscale.
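To show what these transformations do to the pixels, here is a small sketch in plain Python, assuming an image is a list of rows and each pixel is an `(R, G, B)` tuple. Real projects normally use an imaging library for this; the helper names below are made up for illustration.

```python
def to_grayscale(image):
    """Average the R, G, B channels of every pixel."""
    return [[sum(pixel) / 3 for pixel in row] for row in image]

def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def pad(image, width, value=0):
    """Add a border of `width` pixels filled with `value` on every side."""
    row_length = len(image[0]) + 2 * width
    top = [[value] * row_length for _ in range(width)]
    middle = [[value] * width + list(row) + [value] * width for row in image]
    bottom = [[value] * row_length for _ in range(width)]
    return top + middle + bottom

def center_crop(image, size):
    """Cut a size x size square out of the middle of the image."""
    top = (len(image) - size) // 2
    left = (len(image[0]) - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]

# A tiny hypothetical 2x2 RGB image.
image = [[(30, 60, 90), (0, 0, 0)],
         [(255, 255, 255), (10, 20, 30)]]

gray = to_grayscale(image)      # 2x2 grid of single brightness values
rotated = rotate_90(gray)       # same values, turned clockwise
padded = pad(gray, 1)           # 4x4 grid with a border of zeros
cropped = center_crop(padded, 2)  # back to the original 2x2 grid
```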

We then split the entire dataset into training and validation sets.

We use the training data to train the model and the validation data to evaluate it.
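The split itself can be sketched in a few lines. This version shuffles the data first and holds out 20% for validation; both the 20% figure and the fixed seed are assumptions chosen for the example, not fixed rules.

```python
import random

def train_validation_split(items, validation_fraction=0.2, seed=0):
    """Shuffle the items and hold out a fraction of them for validation."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)   # seeded so the split is repeatable
    n_validation = int(len(shuffled) * validation_fraction)
    return shuffled[n_validation:], shuffled[:n_validation]

# Hypothetical image file names.
images = [f"img_{i:03d}.jpg" for i in range(100)]
train_set, validation_set = train_validation_split(images)
```

Shuffling before splitting matters when the files are stored in order, for example all pancakes first and all crepes after.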

3. Choosing a model

The next step is choosing a model or, more specifically, an algorithm by which the machine learns to recognize patterns in the data. Over time, data scientists and researchers have built various models for various kinds of data, such as images, sequences like text or audio, and numerical or text-based data.

There are models that you train from scratch, and then there’s something called Transfer Learning, where we take a pre-trained model and use it as the starting point for training on the data that we have.

4. Training

This is where the bulk of machine learning or deep learning takes place. In this step, we will use our data to train our model to predict whether a given image is a pancake or a crepe.

We feed in the data, let the model make predictions, and compare them with the actual labels; this process then repeats. Each iteration or cycle of training helps the model get better at recognizing the patterns.
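The feed, compare, and repeat cycle can be sketched with a very small model. The example below is not a deep network; it swaps in logistic regression trained by gradient descent so the loop stays readable. The two features per image (say, thickness and diameter scaled to 0-1) and their values are invented for illustration.

```python
import math

def predict_probability(weights, bias, x):
    """Probability that the example is a pancake (label 1)."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train(features, labels, epochs=200, learning_rate=0.5):
    """Repeatedly predict, compare with the true label, and adjust."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):                       # one epoch = one pass over the data
        for x, y in zip(features, labels):
            error = predict_probability(weights, bias, x) - y
            # Nudge each weight against the error (gradient descent step).
            weights = [w - learning_rate * error * xi
                       for w, xi in zip(weights, x)]
            bias -= learning_rate * error
    return weights, bias

# Hypothetical training data: [thickness, diameter], 1 = pancake, 0 = crepe.
features = [[0.9, 0.3], [0.8, 0.4], [0.2, 0.9], [0.1, 0.8]]
labels = [1, 1, 0, 0]

weights, bias = train(features, labels)
```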

5. Evaluation

Once training is completed, we have to evaluate whether our model is any good. This is where the dataset that we kept aside earlier comes into play: we introduce our model to data that it has never seen or used for training. This allows us to see how our model performs when introduced to real-world situations.
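One simple way to put a number on "any good" is accuracy: the fraction of held-out images the model labels correctly. The predictions and true labels below are made up for the sketch; accuracy is only one of several possible metrics.

```python
def accuracy(predictions, actual):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for p, a in zip(predictions, actual) if p == a)
    return correct / len(actual)

# Hypothetical model output on four validation images, and the true labels.
predicted_labels = ["pancake", "crepe", "crepe", "pancake"]
true_labels = ["pancake", "crepe", "pancake", "pancake"]

score = accuracy(predicted_labels, true_labels)
print(f"Validation accuracy: {score:.2f}")
```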

6. Hyperparameter tuning

Once we have evaluated the model, we may think it can do better. We can tune certain parameters and see whether doing so increases or decreases our model’s performance.

Some common hyperparameters are the number of times the model goes through the data (epochs) and the learning rate.

This hyperparameter tuning is more of an experimental process that heavily depends on the type of dataset, model, and training process.
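One common way to run this experiment is a grid search: try every combination of a few candidate values and keep the one that scores best on the validation data. The sketch below reuses a tiny logistic regression as a stand-in model; the data, the candidate values, and the helper names are all assumptions made for illustration.

```python
import itertools
import math

def probability(weights, bias, x):
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train(features, labels, epochs, learning_rate):
    """Tiny logistic regression trained by gradient descent."""
    weights, bias = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            error = probability(weights, bias, x) - y
            weights = [w - learning_rate * error * xi
                       for w, xi in zip(weights, x)]
            bias -= learning_rate * error
    return weights, bias

def accuracy(weights, bias, features, labels):
    hits = sum(1 for x, y in zip(features, labels)
               if (probability(weights, bias, x) >= 0.5) == (y == 1))
    return hits / len(labels)

def grid_search(train_data, validation_data, epoch_options, rate_options):
    """Try every (epochs, learning rate) pair; return the best-scoring one."""
    train_x, train_y = train_data
    val_x, val_y = validation_data
    best = None
    for epochs, rate in itertools.product(epoch_options, rate_options):
        weights, bias = train(train_x, train_y, epochs, rate)
        score = accuracy(weights, bias, val_x, val_y)
        if best is None or score > best[0]:
            best = (score, epochs, rate)
    return best

# Hypothetical data: [thickness, diameter], 1 = pancake, 0 = crepe.
train_data = ([[0.9, 0.3], [0.8, 0.4], [0.2, 0.9], [0.1, 0.8]], [1, 1, 0, 0])
validation_data = ([[0.85, 0.35], [0.15, 0.85]], [1, 0])

best_score, best_epochs, best_rate = grid_search(
    train_data, validation_data, epoch_options=[1, 50], rate_options=[0.01, 0.5])
```

Note that the combinations are compared on the validation set, not on the training data, so the winner is chosen by how well it handles images it was not trained on.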

7. Predicting

At the end of the day, we want our model to predict stuff that it’s trained for.

Once all the above steps are completed, we can put our model in the real world and solve problems.

We can finally use our model to predict whether the given image is a pancake or crepe.
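As a last sketch, here is what the prediction step might look like for a simple trained model. The weights, the bias, and the two input features are hypothetical stand-ins for whatever a real training run produces.

```python
import math

# Hypothetical values standing in for the result of a training run.
WEIGHTS = [4.0, -4.0]
BIAS = 0.4

def predict(features):
    """Return the predicted label and the model's pancake probability."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    pancake_probability = 1.0 / (1.0 + math.exp(-z))
    label = "pancake" if pancake_probability >= 0.5 else "crepe"
    return label, pancake_probability

# Features for a new, unseen image: [thickness, diameter] scaled to 0-1.
label, pancake_probability = predict([0.9, 0.2])
print(label, round(pancake_probability, 3))
```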

The power of AI, ML, or DL is that we trained a model to predict whether a given image is a pancake or a crepe without human intervention or manual rules.

We can apply this process to different domains as well to solve or automate a problem.